I'm a data hoarder. I love data: the simplicity of it, and the sheer massiveness of it all combined. I'm the guy who made sure all of his songs had the right tags and who kept monthly backups of his work going back years. That takes me back. But data in itself isn't anything special. [1, 5, 7, 2] is some data I just made up. The representation is what matters. We can count it, we can group it, we can cross-reference it with other data, and we can even use that calculus we learned and never thought we'd use. So maybe those numbers I wrote mean something. We'll get to that later. In this post I'll touch on the developer pros and woes that come with working with large amounts of data, on the more practical side that leads to those aha moments when you realize what the data means, and on some awesome people out there working to make this data available to the public.

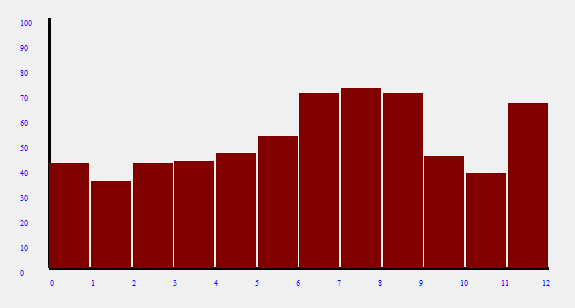

number of tasks completed on a website during my prime

Capturing Good Data

The more meaningful data you have to work with, the more representations you can derive from it.

Always plan ahead though. I can't count how many times at my previous job somebody wanted a report with a particular metric and I had to explain that the data simply wasn't being stored and that we had to start from zero. A few times there was enough basic data in the database that the new metric could be reconstructed, but you lose accuracy and developer time, and it just isn't scalable.

The biggest issue I've run across, though, comes with storing data. My old mentality used to be "We'll just store that too, not a problem". For small projects, not a big deal. For a high-traffic website, kind of a big deal. 150GB later, we had a problem. Backups became expensive to perform, adding new indexes locked tables, and inserts took longer. Across the board there were side effects of keeping such vast amounts of data that was never actually used for anything.

Personally, I like to run through the possible uses of data before I start to collect it. On an old website of mine I used to track all page views and user actions. I didn't really use the data; I just liked having it, just in case. 3 million records later, I realized I had absolutely no need for this data, and that running the "backup database" script on a shared server has its limitations.

Things to consider about data:

- The type of data being stored

- Hardware limitations (storage space, etc)

- Usability of data
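One way to weigh the considerations above before collecting anything is a back-of-the-envelope storage estimate. The sketch below is only an illustration: the function name `estimated_storage_gb` and every number fed into it are made up, and it ignores indexes, backups, and per-row overhead, all of which make the real figure larger.

```python
def estimated_storage_gb(rows_per_day, bytes_per_row, days):
    """Rough raw-data size in gigabytes (1 GB = 1e9 bytes).

    Ignores index, backup, and per-row overhead, so treat the
    result as a lower bound rather than a forecast.
    """
    return rows_per_day * bytes_per_row * days / 1e9


# Hypothetical tracking table: 50,000 page views a day,
# about 200 bytes per row, kept for one year.
one_year = estimated_storage_gb(rows_per_day=50_000, bytes_per_row=200, days=365)
print(f"{one_year:.2f} GB")  # 3.65 GB
```

If the estimate already looks uncomfortable for the hardware you have, that is a good moment to ask whether the data will ever be used.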

Data Representation

Having the data is just step 1. As an example, I store views for each post on this blog. It's just a listing of the page and the date. I can count the rows per post and get a nice number for how many users have read a post. That's a good datum to have, but it doesn't give us much to analyze. However, by grouping by day/month/year I can see how the popularity of each post has changed over the past week or month. That is definitely much more useful to me than knowing how many people read a single post, as there isn't much I can do about that.
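To make the difference concrete, here is a minimal sketch of both representations over the same rows. The post slugs and dates are invented for the example, and this is not how this blog actually stores its views; it only assumes each view is a (page, date) pair as described above.

```python
from collections import Counter
from datetime import date

# Hypothetical page-view log: one (post slug, view date) row per view.
views = [
    ("data-hoarding", date(2014, 1, 3)),
    ("data-hoarding", date(2014, 1, 17)),
    ("data-hoarding", date(2014, 2, 2)),
    ("hello-world", date(2014, 2, 9)),
]


def total_views(rows):
    """Count views per post: one number per post, no sense of time."""
    return Counter(slug for slug, _ in rows)


def views_by_month(rows):
    """Count views per (post, year, month) to expose the trend over time."""
    return Counter((slug, day.year, day.month) for slug, day in rows)


totals = total_views(views)
monthly = views_by_month(views)
print(totals["data-hoarding"])               # 3
print(monthly[("data-hoarding", 2014, 1)])   # 2
print(monthly[("data-hoarding", 2014, 2)])   # 1
```

Same data, two representations: the first says the post was read three times, the second says interest dropped from January to February.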

Here's another quick example:

Random Crimes

| Crime | Date | Location (lat, long) |
| --- | --- | --- |
| assault | 1/1/2014 | 33.901372, -118.284169 |
| arson | 1/2/2014 | 33.901372, -118.284169 |
| Jade Cat stolen | ??? | 33.901372, -118.284169 |

just some data I made up

You file a police report, it goes into some database that's who knows how old, and we hear in the news "Crime is down overall 20% this year...". I'd wager that unreported crimes went up 20% that same year as well. So, pretty boring data so far. However, if you take a look at CrimeMapping.com, you'll be in for a treat. A very dark look at society kind of treat :/ . When I first heard of this site, I was blown away, mainly by the high amount of crime in my neighborhood, but also by the fact that it existed. You can follow trails of petty thefts over a week, or car break-ins, etc. You can see where crime is more concentrated and be pretty much blown away at what's going on in the world.
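As a rough idea of how "concentration" can fall out of plain rows, the sketch below counts the made-up crimes from the table above per map cell, where a cell is just the coordinates rounded to a few decimal places. The function name `incidents_per_cell` and the rounding approach are my own assumptions for illustration, not how CrimeMapping.com works.

```python
from collections import Counter

# The made-up rows from the table above; the date is None where unknown.
crimes = [
    ("assault", "1/1/2014", (33.901372, -118.284169)),
    ("arson", "1/2/2014", (33.901372, -118.284169)),
    ("Jade Cat stolen", None, (33.901372, -118.284169)),
]


def incidents_per_cell(rows, precision=3):
    """Count incidents per grid cell.

    A cell is the (lat, long) pair rounded to `precision` decimal
    places, so nearby incidents land in the same bucket.
    """
    return Counter(
        (round(lat, precision), round(lon, precision))
        for _, _, (lat, lon) in rows
    )


hotspots = incidents_per_cell(crimes)
print(hotspots.most_common(1))
```

With real data, the cells with the highest counts are the ones worth drawing on a map first.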

Data.gov

Data.gov is a government website created in 2009 with the purpose of making unrestricted government data public. The data covers commerce, agriculture, jobs, research, oceanography, and so on. At launch the site contained 40 datasets. To date, it hosts over 90,000 datasets. Now, "US Natural Gas Prices" or "Food Price Outlook" may not be the most interesting data, but again, it's not just the data but the representation that matters. Somebody out there could take Natural Gas Price data from the past 30 years, find a pattern between that and the number of trees growing, and conclude that more trees = more gas 0_o'. Or not.

I definitely hope to spend some time on data.gov in the near future and see where the data takes me.

An Awesome Data Project

SETI@home is a volunteer-based computer-sharing project that aids in processing a vast amount of data from radio signals and other scientific data in the search for extraterrestrial life. Currently there are over 5 million participants worldwide lending their processing power to the program, having aggregated over 2 million years of computing time (mind blown). To be a part of the project, feel free to check out SETI@home.

Walter Guevara is a software engineer and startup founder who currently teaches programming for a coding bootcamp. He is currently building things that don't yet exist.